Estimate the expdp dump size without running a real export.

expdp \'/ as sysdba\' directory=DATAPUMP_DIR full=y ESTIMATE_ONLY=y
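The estimate can be based on allocated blocks or on optimizer statistics, selected with the ESTIMATE parameter (BLOCKS or STATISTICS). The helper below is a minimal sketch that only builds the command line so it can be reviewed before it is run; the function name `build_estimate_cmd` is made up for this example and is not part of Data Pump.

```shell
#!/bin/sh
# Build (but do not run) an estimate-only expdp command line.
# $1 = directory object name
# $2 = estimate method: BLOCKS (default) or STATISTICS
build_estimate_cmd() {
    dir_name=$1
    method=${2:-BLOCKS}
    case "$method" in
        BLOCKS|STATISTICS) ;;
        *) echo "unsupported ESTIMATE method: $method" >&2; return 1 ;;
    esac
    # The escaped quotes are kept so the printed line can be pasted into a shell.
    printf '%s\n' "expdp \\'/ as sysdba\\' directory=$dir_name full=y estimate_only=y estimate=$method"
}

build_estimate_cmd DATAPUMP_DIR STATISTICS
```

STATISTICS is usually closer to the real dump size when table statistics are current; BLOCKS tends to overestimate on fragmented tables.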

As you know, expdp is a logical backup, not a physical one. A dump can be imported into the same version or into a higher version. Going from a higher version to a lower one is restricted: the export has to be taken with the VERSION parameter set to the target release.

There are a few important things to keep in mind.

1. Create a directory object if one does not already exist, and make sure the relevant user has READ and WRITE privileges on it. This directory is used to store the dump and log files.

CREATE OR REPLACE DIRECTORY DIR_NAME AS '/ora01/db/oracle/';

GRANT READ, WRITE ON DIRECTORY DIR_NAME TO scott;
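A common cause of failures at this step is an operating system path that does not exist or is not writable by the Oracle software owner. The sketch below checks the path first and only then prints the two statements; the function name `emit_directory_sql` is hypothetical, and the check assumes the script runs as the OS user that owns the database processes.

```shell
#!/bin/sh
# Print the CREATE DIRECTORY and GRANT statements only if the OS path is usable.
# $1 = directory object name, $2 = OS path, $3 = grantee
emit_directory_sql() {
    dir_name=$1
    os_path=$2
    grantee=$3
    # Assumption: the current OS user is the Oracle software owner.
    if [ ! -d "$os_path" ] || [ ! -w "$os_path" ]; then
        echo "path is missing or not writable: $os_path" >&2
        return 1
    fi
    cat <<EOF
CREATE OR REPLACE DIRECTORY $dir_name AS '$os_path';
GRANT READ, WRITE ON DIRECTORY $dir_name TO $grantee;
EOF
}
```

The output can be saved to a file and run from SQL*Plus, for example `emit_directory_sql DIR_NAME /ora01/db/oracle scott > create_dir.sql`.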

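2. Whenever you export an online database or schema, make sure the export is consistent. In 11.2 you can use the legacy parameter CONSISTENT=Y, which Data Pump translates to FLASHBACK_TIME. If needed, you can instead pin the export to a specific SCN with FLASHBACK_SCN or to a point in time with FLASHBACK_TIME.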

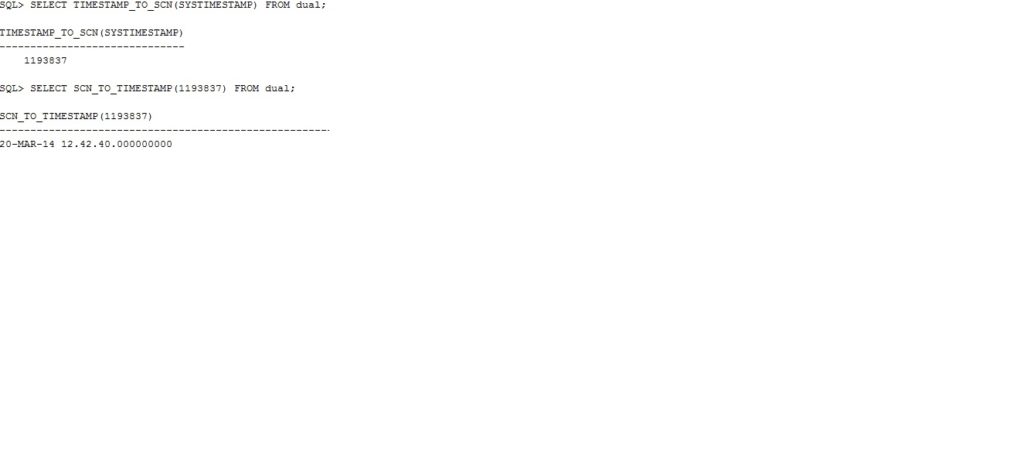

Check SCN/TIMESTAMP:

Alternatively, use the syntax below. It makes the export consistent as of the time it starts.

Note: This form has been used successfully many times.

expdp \"/ as sysdba\" FLASHBACK_TIME=\"TO_TIMESTAMP\(TO_CHAR\(SYSDATE,\'YYYY-MM-DD HH24:MI:SS\'\),\'YYYY-MM-DD HH24:MI:SS\'\)\"
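The backslashes in the command above are there only to protect quotes and parentheses from the shell. Putting the parameters in a parameter file avoids the escaping altogether. The following is a sketch under assumptions: the function name `write_consistent_parfile`, the schema name, and the dump and log file names are illustrative and not taken from the original command.

```shell
#!/bin/sh
# Write a Data Pump parameter file for a consistent schema export.
# $1 = parfile path, $2 = schema name, $3 = directory object name
write_consistent_parfile() {
    parfile=$1
    schema=$2
    dir_name=$3
    {
        printf 'schemas=%s\n' "$schema"
        printf 'directory=%s\n' "$dir_name"
        printf 'dumpfile=%s.dmp\n' "$schema"
        printf 'logfile=%s_exp.log\n' "$schema"
        # Same expression as on the command line, without shell escaping.
        printf '%s\n' "flashback_time=\"TO_TIMESTAMP(TO_CHAR(SYSDATE,'YYYY-MM-DD HH24:MI:SS'),'YYYY-MM-DD HH24:MI:SS')\""
    } > "$parfile"
}
```

After `write_consistent_parfile /tmp/scott_exp.par scott DIR_NAME`, the export would be started with `expdp \'/ as sysdba\' parfile=/tmp/scott_exp.par`.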

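    -- WARNING: drops every table, synonym, view, package, procedure, function,
    -- sequence and type owned by the connected user. Check the login first.
    SET SERVEROUTPUT ON SIZE 1000000

    BEGIN
      FOR cur_rec IN (SELECT object_name, object_type
                      FROM   user_objects
                      WHERE  object_type IN ('TABLE', 'SYNONYM', 'VIEW', 'PACKAGE',
                                             'PROCEDURE', 'FUNCTION', 'SEQUENCE', 'TYPE')) LOOP
        BEGIN
          IF cur_rec.object_type = 'TABLE' THEN
            -- Tables need CASCADE CONSTRAINTS so referencing foreign keys go too.
            EXECUTE IMMEDIATE 'DROP ' || cur_rec.object_type || ' "' || cur_rec.object_name || '" CASCADE CONSTRAINTS';
          ELSE
            EXECUTE IMMEDIATE 'DROP ' || cur_rec.object_type || ' "' || cur_rec.object_name || '"';
          END IF;
        EXCEPTION
          WHEN OTHERS THEN
            -- Report the failure and carry on with the next object.
            DBMS_OUTPUT.put_line('FAILED: DROP ' || cur_rec.object_type || ' "' || cur_rec.object_name || '"');
        END;
      END LOOP;
    END;
    /

    -- Dropped tables go to the recycle bin; purge it to release the space.
    PURGE RECYCLEBIN;

    SET ERRORLOGGING OFF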

$ORACLE_HOME/rdbms/admin

| Script Name | Description |
|---|---|
| addmrpt.sql | Automatic Database Diagnostic Monitor (ADDM) report |
| addmrpti.sql | Automatic Database Diagnostic Monitor (ADDM) report |
| addmtmig.sql | Post-upgrade script to load new ADDM task metadata tables for task migration. |
| ashrpt.sql | Active Session History (ASH) report |
| ashrpti.sql | Active Session History (ASH) report. RAC and Standby Database support added in 2008. |
| ashrptistd.sql | Active Session History (ASH) report helper script for obtaining user input when run on a Standby. |
| awrblmig.sql | AWR Baseline Migration |
| awrddinp.sql | Retrieves dbid, eid, filename for SWRF and ADDM reports |
| awrddrpi.sql | Workload Repository Compare Periods Report |
| awrddrpt.sql | Produces Workload Repository Compare Periods Report |
| awrextr.sql | Helps users extract data from the AWR |
| awrgdinp.sql | AWR global compare periods report input variables |
| awrgdrpi.sql | Workload repository global compare periods report |
| awrgdrpt.sql | AWR global differences report |
| awrginp.sql | AWR global input |
| awrinfo.sql | Outputs general Automatic Workload Repository (AWR) information such as the size and data distribution |
| awrinput.sql | Common code used for SWRF reports and ADDM |
| awrload.sql | Uses Data Pump to load information from dump files into the AWR |
| awrrpt.sql | Automatic Workload Repository (AWR) report |
| awrrpti.sql | Automatic Workload Repository (AWR) report |
| awrsqrpi.sql | Reports on differences in values recorded in two different snapshots |
| awrsqrpt.sql | Produces a workload report on a specific SQL statement |
| catalog.sql | Builds the data dictionary views |
| catblock.sql | Creates views that dynamically display lock dependency graphs |
| catclust.sql | Builds DBMS_CLUSTDB built-in package |
| caths.sql | Installs packages for administering heterogeneous services |
| catio.sql | Allows I/O to be traced on a table-by-table basis |
| catnoawr.sql | Script to uninstall AWR features |
| catplan.sql | Builds PLAN_TABLE$: a public global temporary table version of PLAN_TABLE. |
| dbfs_create_filesystem.sql | DBFS create file system script |
| dbfs_create_filesystem_advanced.sql | DBFS create file system script |
| dbfs_drop_filesystem.sql | DBFS drop file system |
| dbmshptab.sql | Permanent structures supporting DBMS_HPROF hierarchical profiler |
| dbmsiotc.sql | Analyzes chained rows in index-organized tables. |
| dbmspool.sql | Enables DBA to lock PL/SQL packages, SQL statements, and triggers into the shared pool. |
| dumpdian.sql | Allows one to dump Diana out of a database in a human-readable format (exec dumpdiana.dump('DMMOD_LIB');) |
| epgstat.sql | Shows the status of the embedded PL/SQL gateway and the XDB HTTP listener. It should be run by a user with XDBADMIN and DBA roles. |
| hangdiag.sql | Hang analysis/diagnosis script |
| prgrmanc.sql | Purges from RMAN Recovery Catalog the records marked as deleted by the user |
| recover.bsq | Creates recovery manager tables and views … read the header very carefully if considering performing an edit |
| sbdrop.sql | SQL*Plus command file to drop the user and tables for readable standby |
| sbduser.sql | SQL*Plus command file to DROP the user which contains the standby statspack database objects |
| sbreport.sql | Calls sbrepins.sql to produce the standby statspack report. It must run as the standby statspack owner, stdbyperf |
| scott.sql | Creates the SCOTT schema objects and loads the data |
| secconf.sql | Secure configuration script: Laughable but better than the default |
| spauto.sql | SQL*Plus command file to automate the collection of STATSPACK statistics |
| spawrrac.sql | Generates a global AWR report to report performance statistics on all nodes of a cluster |
| spcreate.sql | Creates the STATSPACK user, table, and package |
| sppurge.sql | Purges a range of STATSPACK data |
| sprepcon.sql | STATSPACK report configuration. |
| sprepsql.sql | Defaults the dbid and instance number to the current instance connected to, then calls sprsqins.sql to produce the standard Statspack SQL report |
| sprsqins.sql | STATSPACK report. |
| sql.bsq | Drives the creation of the Oracle catalog and data dictionary objects. |
| tracetab.bsq | Creates tracing table for the DBMS_TRACE built-in package. |
| userlock.sql | Routines that allow the user to request, convert and release locks. |
| utlchain.sql | Creates the default table for storing the output of the analyze list chained rows command. |
| utlchn1.sql | Creates the default table for storing the output of the analyze list chained rows command. |
| utlconst.sql | Constraint check utility to check for valid date constraints. |
| utldim.sql | Builds the Exception table for DBMS_DIMENSION.VALIDATE_DIMENSION. |
| utldtchk.sql | Verifies that a valid database object has correct dependency$ timestamps for all its parent objects. Violation of this invariant can show up as one of the following: invalid dependency references [DEP/INV] in library cache dumps; ORA-06508: PL/SQL: could not find program unit being called; PLS-00907: cannot load library unit %s (referenced by %s); ORA-00600 [kksfbc-reparse-infinite-loop] |
| utldtree.sql | Shows objects recursively dependent on given object. |
| utledtol.sql | Creates the outline tables OL$, OL$HINTS, and OL$NODES in a user schema for working with stored outlines |
| utlexcpt.sql | Builds the Exception table for constraint violations. |
| utlexpt1.sql | Creates the default table (EXCEPTIONS) for storing exceptions from enabling constraints. Can handle both physical and logical rowids. |
| utlip.sql | Can be used to invalidate all existing PL/SQL modules (procedures, functions, packages, types, triggers, views) in a database so that they will be forced to be recompiled later, either automatically or deliberately. |
| utllockt.sql | Prints the sessions in the system that are waiting for locks, and the locks they are waiting for. |
| utlpwdmg.sql | Creates the default Profile password VERIFY_FUNCTION. |
| utlrdt.sql | Recompiles all DDL triggers in UPGRADE mode at the end of one of three operations: a DB upgrade; utlirp to invalidate and recompile all PL/SQL; dbmsupgnv/dbmsupgin to convert PL/SQL to native/interpreted |
| utlrp.sql | Recompiles all invalid objects in the database. |
| utlscln.sql | Copies a snapshot schema from another snapshot site |
| utlsxszd.sql | Calculates the required size for the SYSAUX tablespace. |
| utltkprf.sql | Grants public access to all views used by TKPROF with verbose=y option |
| utluiobj.sql | Outputs the difference between invalid objects post-upgrade and those objects that were invalid pre-upgrade |
| utlu112i.sql | Provides information about databases prior to upgrade (Supported releases: 9.2.0, 10.1.0 and 10.2.0) |
| utlvalid.sql | Creates the default table for storing the output of the analyze validate command on a partitioned table |
| utlxaa.sql | Defines a user-defined workload table for SQL Access Advisor. The table is used as workload source for SQL Access Advisor where a user can insert SQL statements and then specify the table as a workload source. |
| utlxmv.sql | Creates the MV_CAPABILITIES_TABLE for DBMS_MVIEW.EXPLAIN_MVIEW. |
| utlxplan.sql | Builds PLAN_TABLE: required for Explain Plan, DBMS_XPLAN, and AUTOTRACE (replaced by catplan.sql) |
| utlxplp.sql | Displays Explain Plan from PLAN_TABLE using DBMS_XPLAN built-in. Includes parallel run information |
| utlxpls.sql | Displays Explain Plan from PLAN_TABLE using DBMS_XPLAN built-in. Does not include parallel query information |
| utlxrw.sql | Builds the REWRITE_TABLE for EXPLAIN_REWRITE tests |
| xdbvlo.sql | Validates XML DB schema objects |
| $ORACLE_HOME/sqlplus/admin | |
| glogin.sql | SQL*Plus global login "site profile" file. Add SQL*Plus commands here to execute them when a user starts SQL*Plus and/or connects |
| plustrce.sql | Creates the PLUSTRACE role required to use AUTOTRACE |
| pupbld.sql | Creates PRODUCT_USER_PROFILE |
| $ORACLE_HOME/sysman/admin/scripts/db | |
| dfltAccPwd.sql | Checks for default accounts with default passwords |
| hanganalyze.sql | Hang analysis script for stand-alone databases |
| hanganalyzerac.sql | Hang analysis script for RAC clusters |

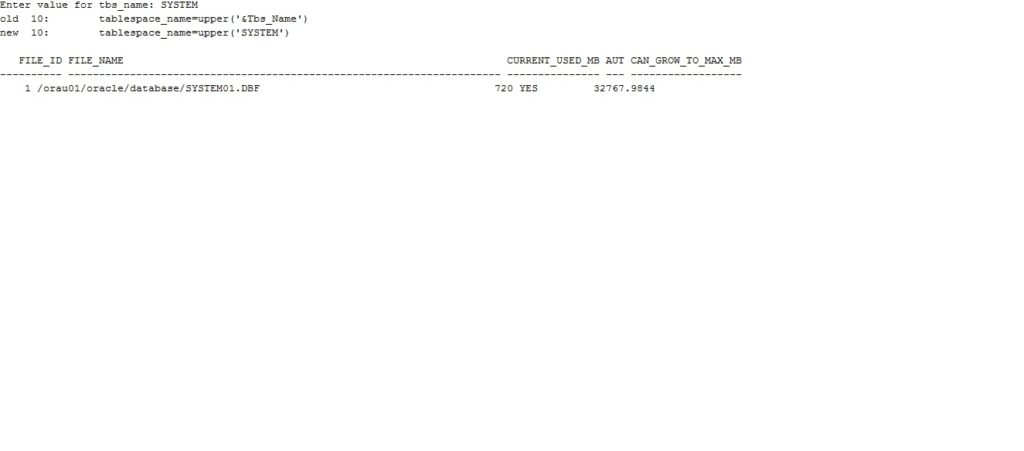

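Current size, autoextend setting, and maximum size in MB for the datafiles of a given tablespace

    set lines 200
    set pagesize 150
    break on report
    -- COMPUTE must name the column aliases used in the query below.
    compute sum of current_size_mb can_grow_to_max_mb on report
    col file_name format a70

    -- bytes is the size currently allocated to the file;
    -- maxbytes is the autoextend limit (0 when autoextend is off).
    select
      file_id,
      file_name,
      bytes/1024/1024 current_size_mb,
      autoextensible,
      maxbytes/1024/1024 can_grow_to_max_mb
    from
      dba_data_files
    where
      tablespace_name = upper('&Tbs_Name');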

Sample output:

    SET SERVEROUTPUT ON

    declare
      total_size_b number;  -- total size of all datafiles, in bytes
      tfree_size_b number;  -- free space inside the datafiles, in bytes
      tused_size_b number;  -- space allocated to segments, in bytes
    begin
      dbms_output.enable(100000);

      select sum(bytes) into total_size_b from dba_data_files;
      select sum(bytes) into tfree_size_b from dba_free_space;
      select sum(bytes) into tused_size_b from dba_segments;

      -- The format mask is wide enough for databases larger than 1 TB.
      dbms_output.put_line('Total:' || TO_CHAR(Round(total_size_b/1048576, 2), '999,999,999.00') || ' MB');
      dbms_output.put_line('Free: ' || TO_CHAR(Round(tfree_size_b/1048576, 2), '999,999,999.00') || ' MB');
      dbms_output.put_line('Used: ' || TO_CHAR(Round(tused_size_b/1048576, 2), '999,999,999.00') || ' MB');
    end;
    /

    SET SERVEROUTPUT OFF

Sample output:

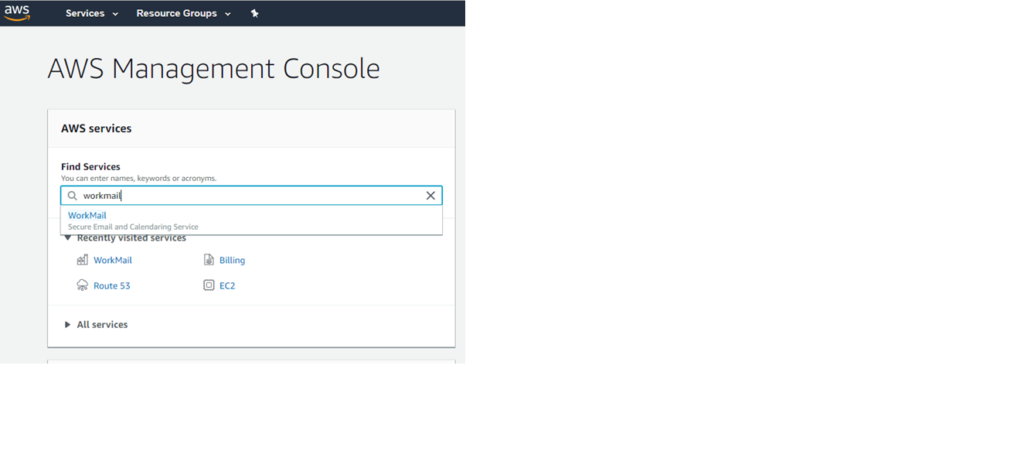

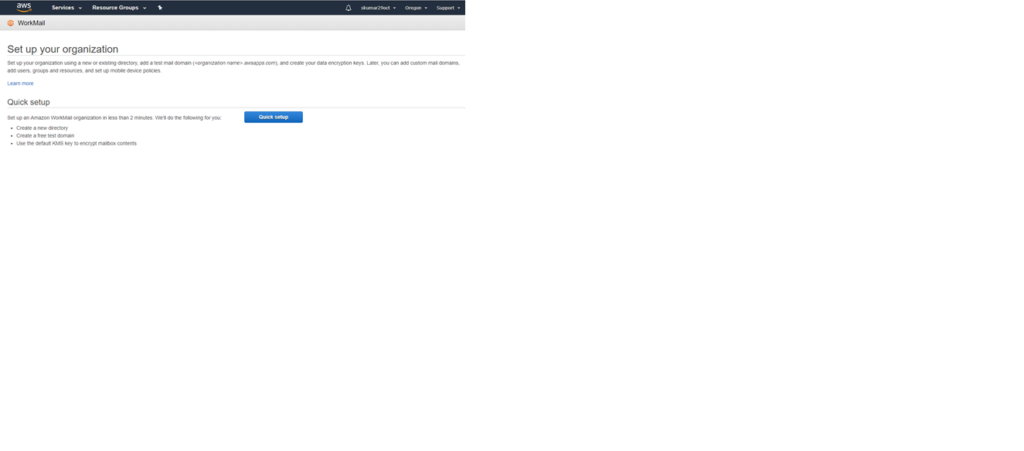

Email is an important service for a website. If your website is hosted on AWS, setting up an email address is straightforward with Amazon WorkMail. Follow the steps below.

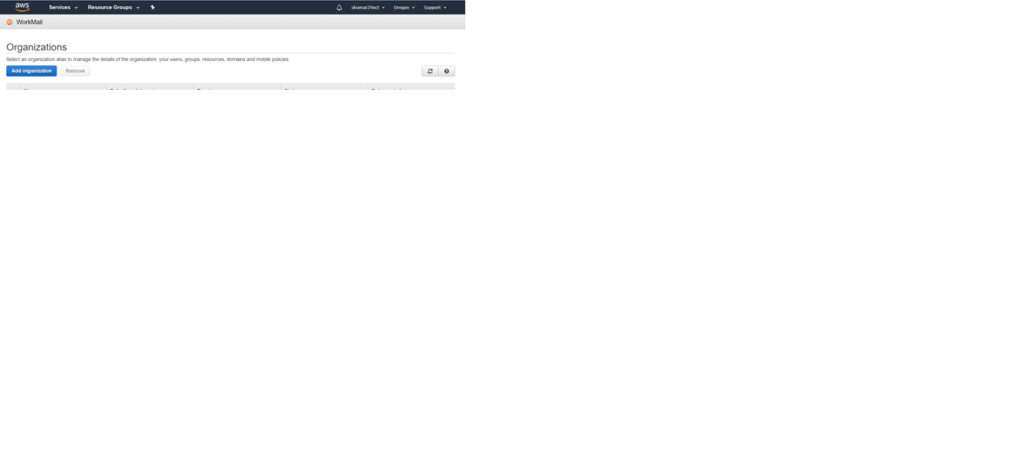

2. Click on add organization.

3. Click on Quick setup on next page.

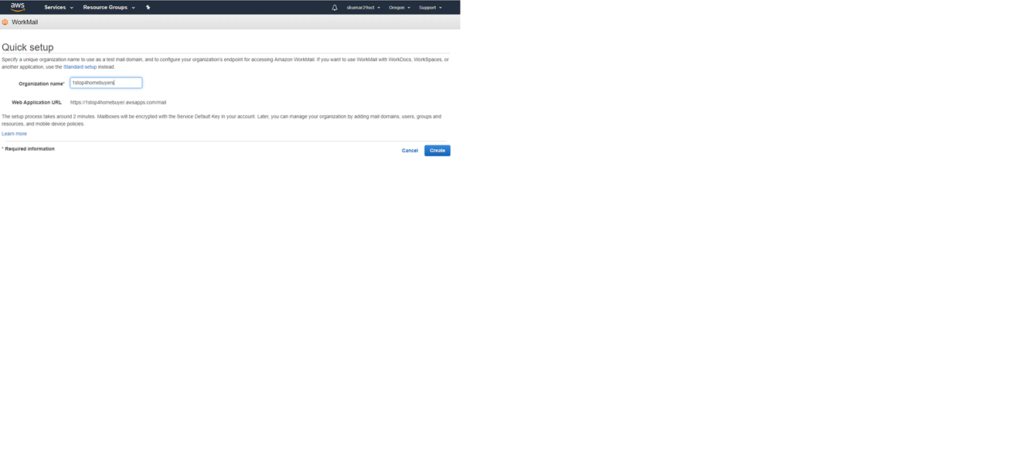

4. Give the domain name in organization.

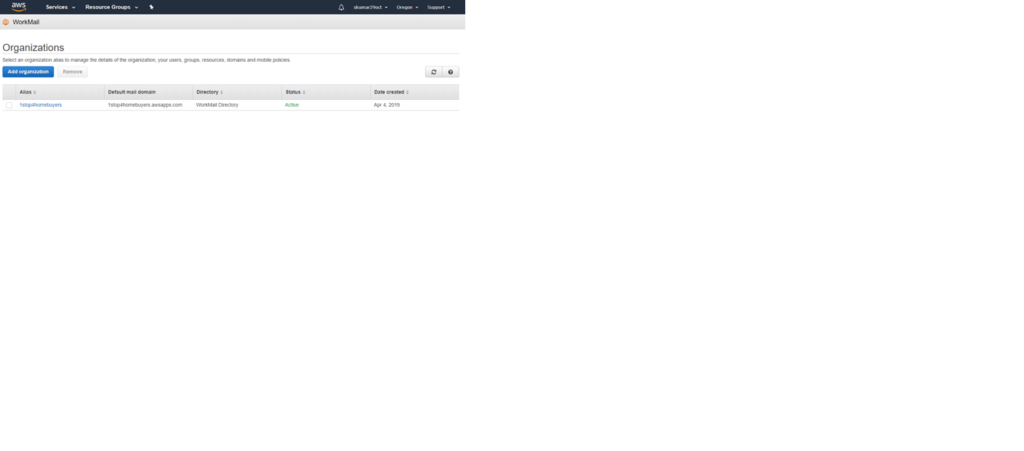

5. You can see it as below after the organization is created. It can take few min to be active.

Here click on name under “Alias”

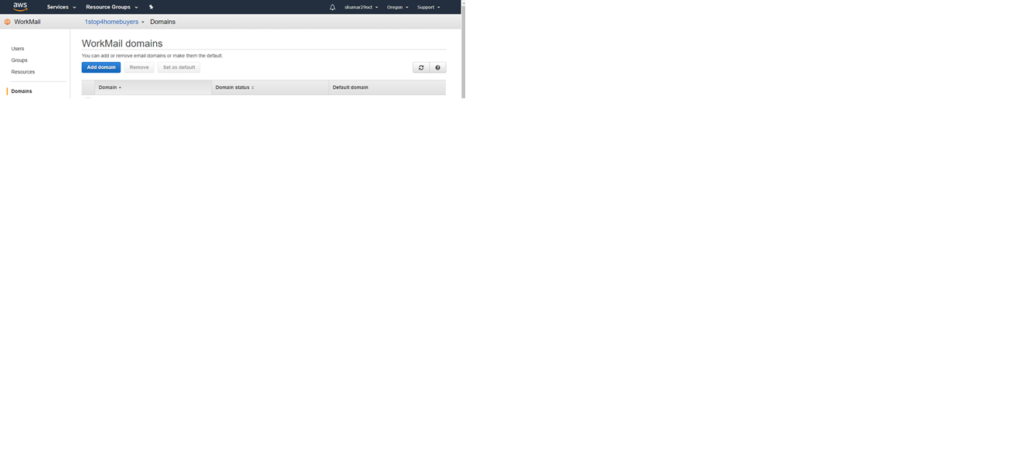

6. Click Domains in the left-hand pane, as shown below.

7. Click on Add domain.

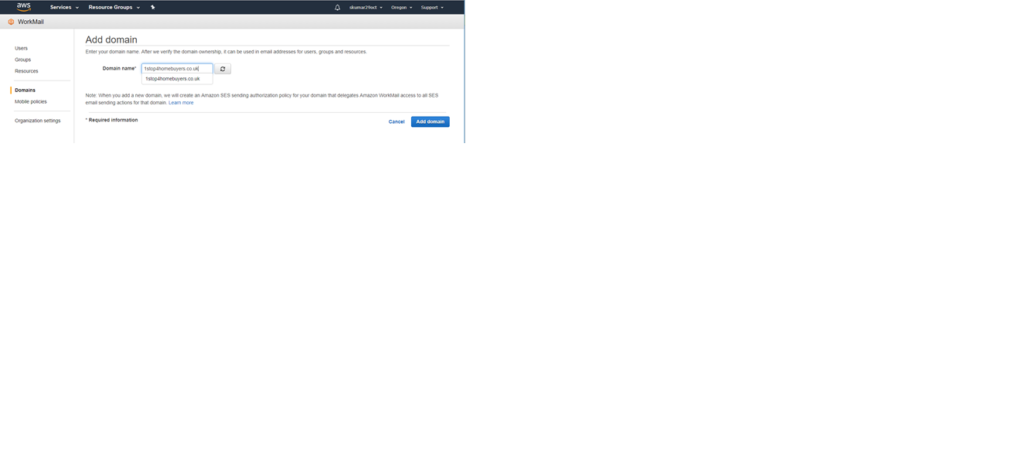

8. Enter your registered domain name and click Add domain.

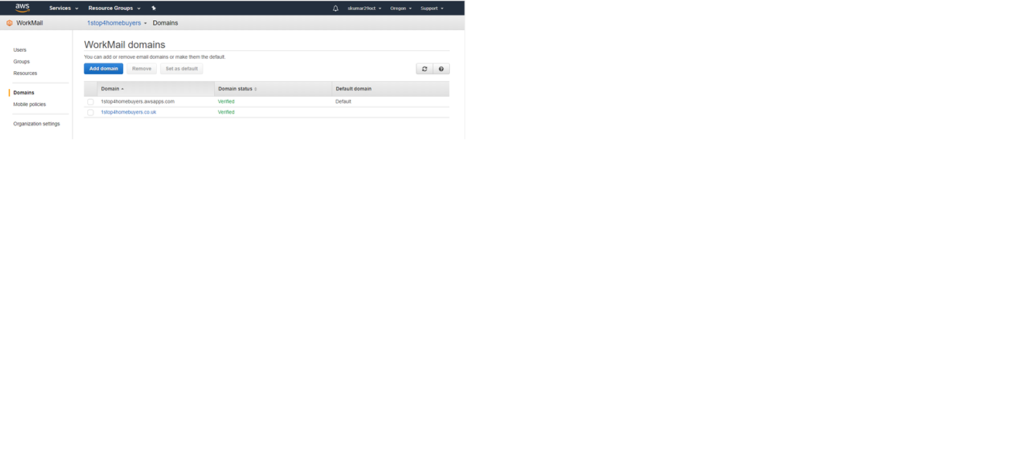

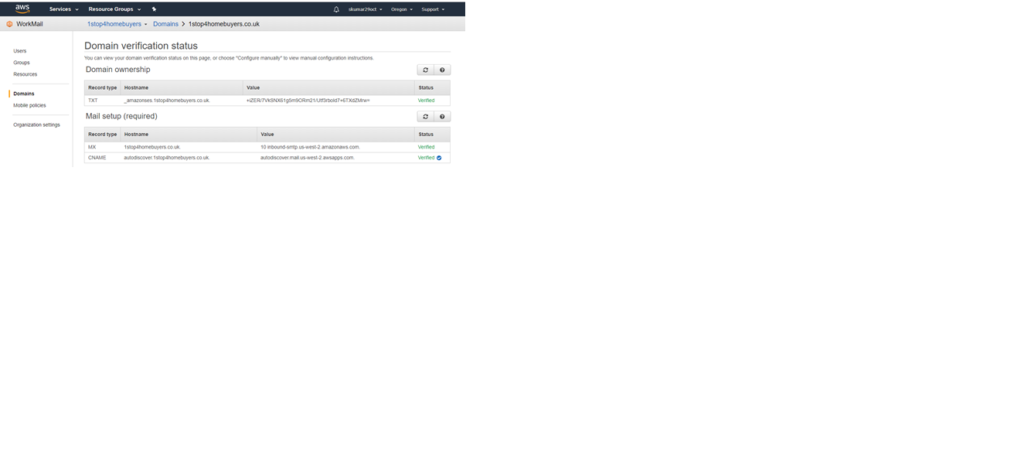

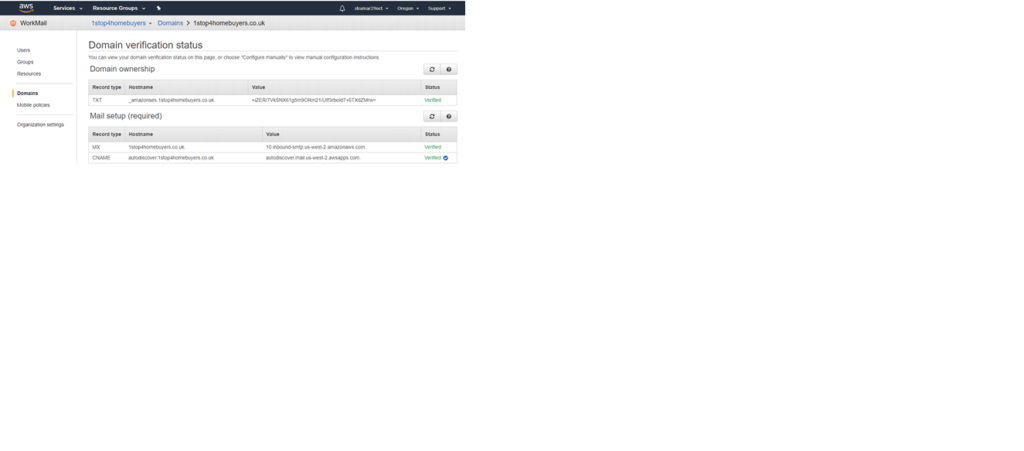

9. On the next screen, shown below, click the domain name you added. To verify the domain name, follow steps 10 to 14. Verification can take a few minutes after those steps are complete.

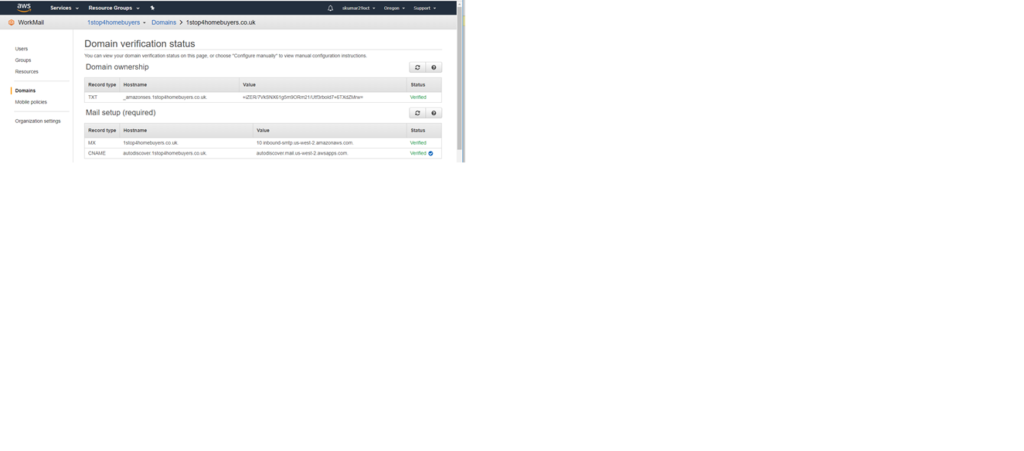

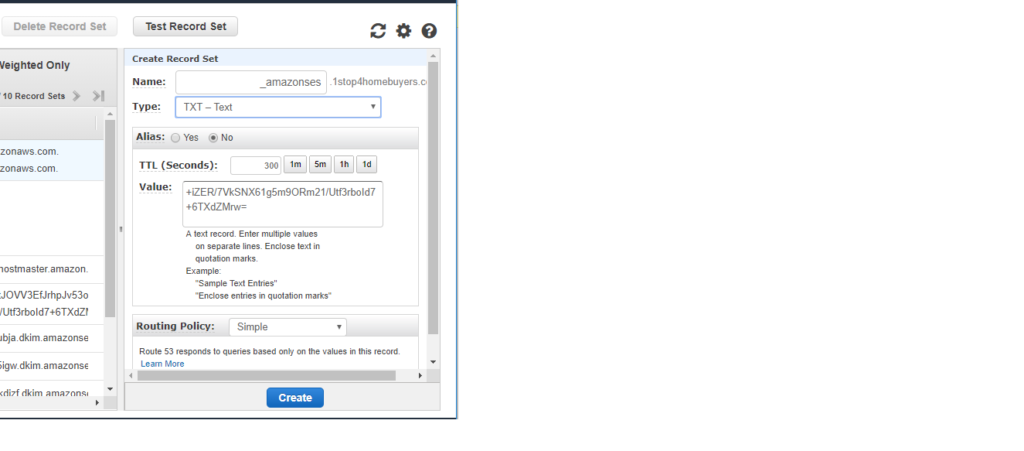

10. To verify the domain name, go to the Domain ownership section of the screen below and copy the record type, the hostname (without the domain name you added), and the value. You will enter them on the Route 53 screen in step 14.

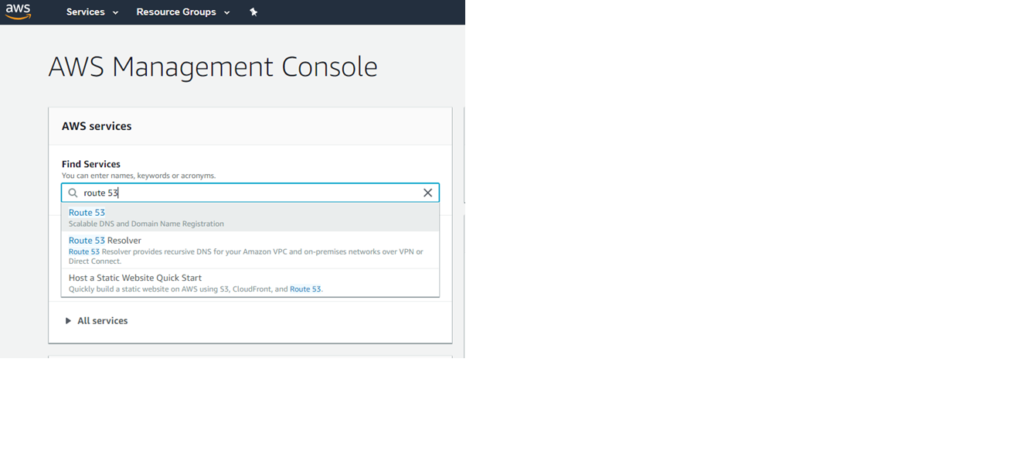

11. Open Route 53 service.

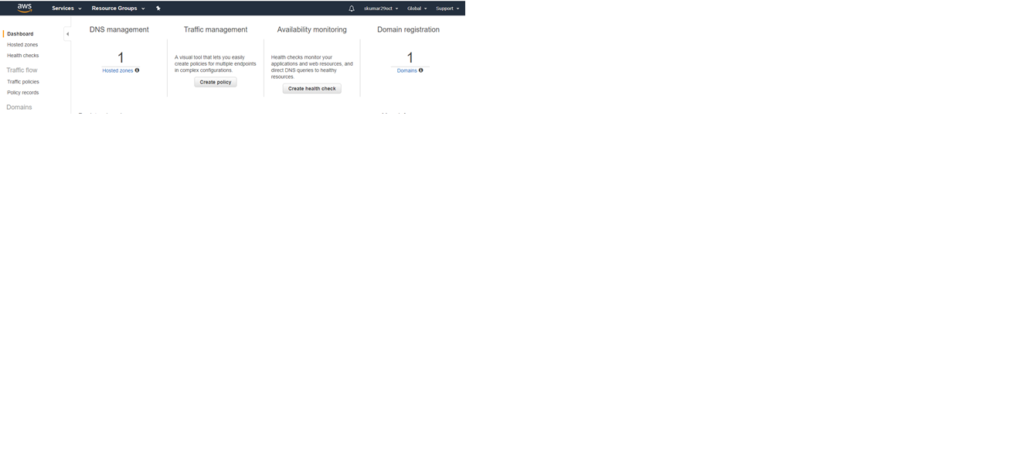

12. Click on Hosted Zones under DNS Management.

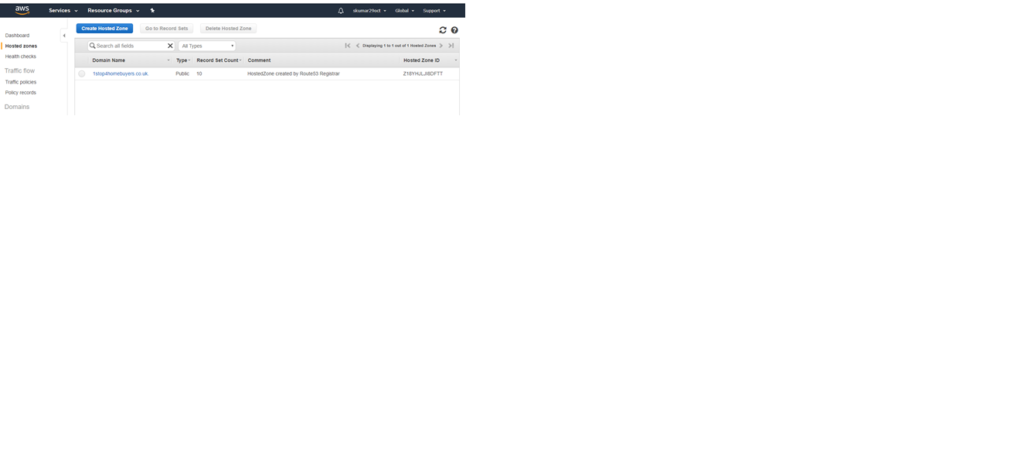

13. Click on domain name.

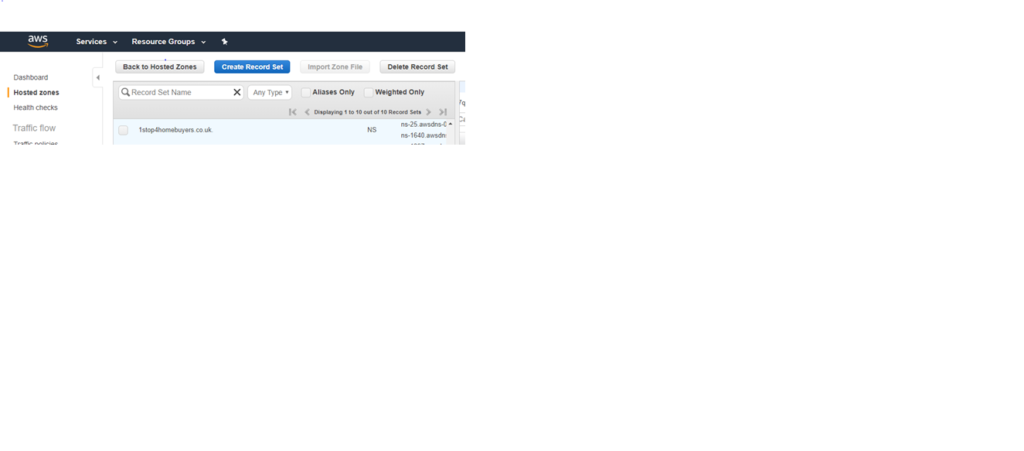

14. Click Create record set and enter the values copied in step 10 on the next screen. Then click Create.

15. Once the domain name is verified, or while verification is still in progress, add the records listed under mail setup on the screen from step 10, in the same way as in step 14.

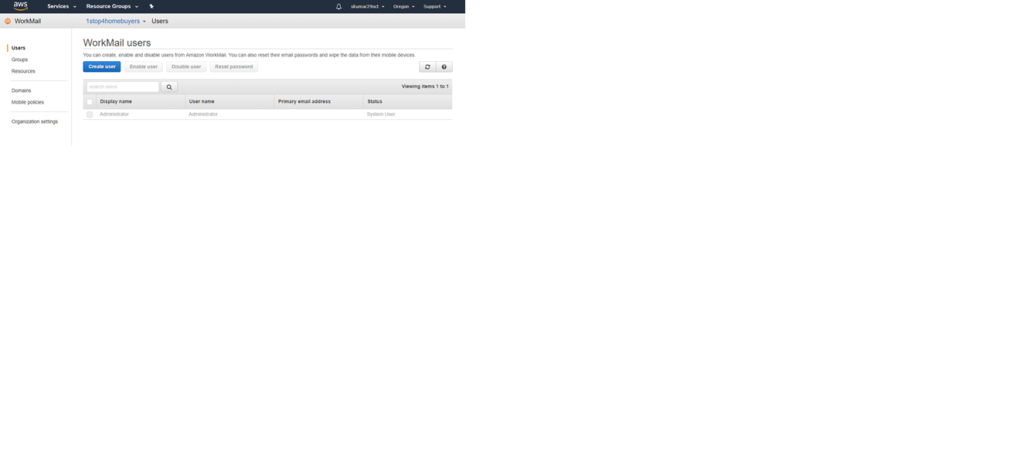

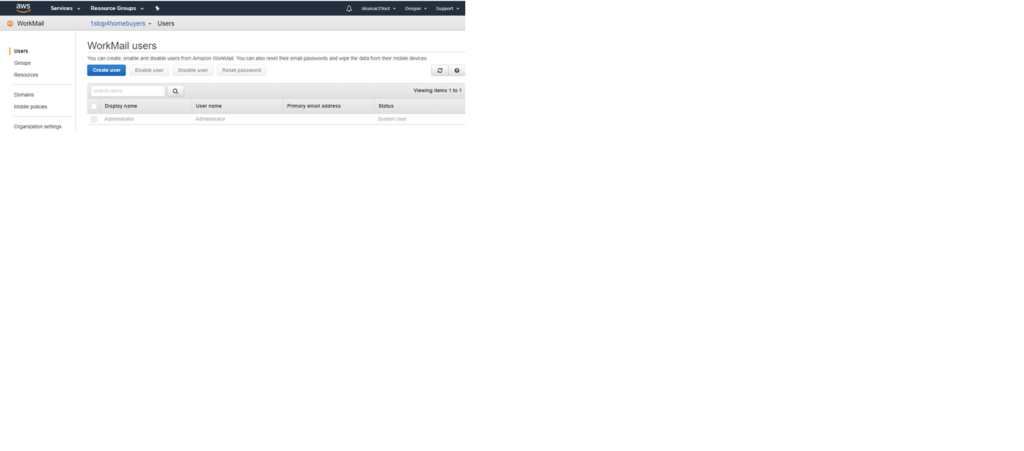

16. After all the records from the WorkMail screen have been added, go to Users in the WorkMail console.

17. Click on Create User.

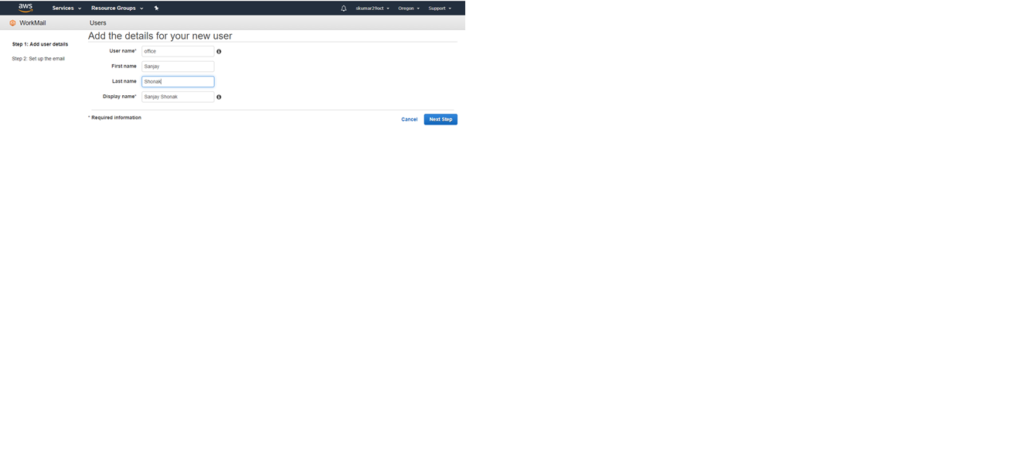

18. Enter the required details as shown below and click Next.

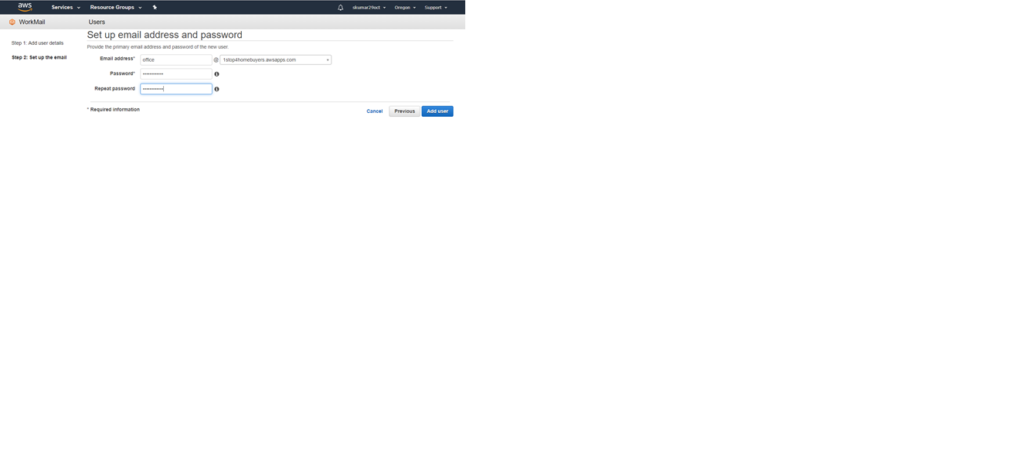

19. Enter a password on the next screen and click Add user.

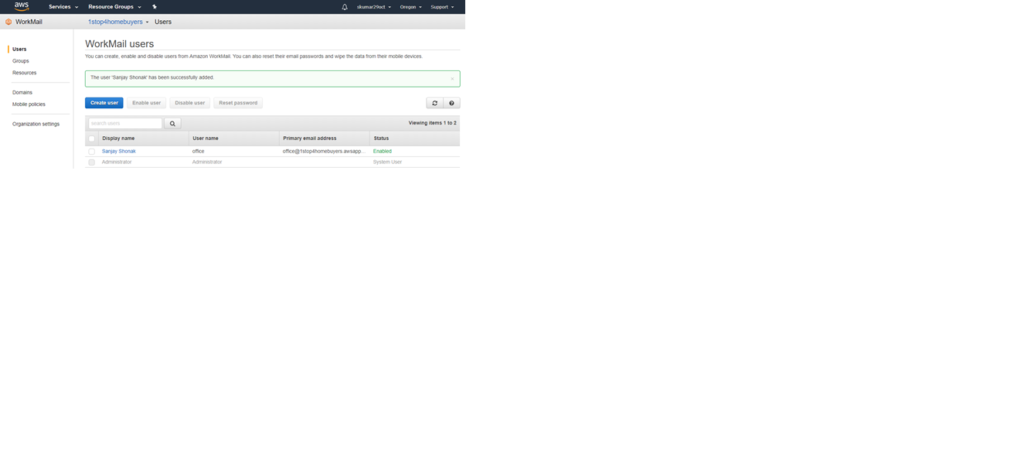

20. The next screen looks like this. Go to Organization settings.

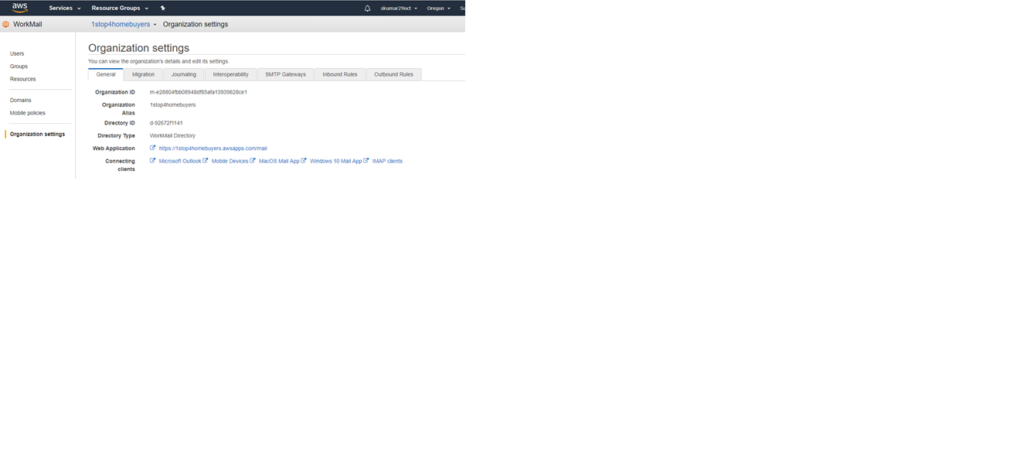

21. Click the link next to Web application.

22. Enter the username and password on the next screen, as shown below, and click Sign in.

23. You can now send and receive messages with the email address you created.
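The record created in step 14 can also be prepared as a Route 53 change batch instead of being typed into the console. The sketch below only generates the JSON for a single TXT record; the function name `txt_change_batch`, the record name, and the value are placeholders, and the real ones come from the Domain ownership section in step 10. The other record types from step 15 would need their own entries.

```shell
#!/bin/sh
# Print a Route 53 change batch (JSON) that upserts one TXT record.
# $1 = fully qualified record name, $2 = record value (without quotes)
txt_change_batch() {
    record_name=$1
    record_value=$2
    # TXT values must be wrapped in escaped double quotes inside the JSON string.
    cat <<EOF
{
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "$record_name",
        "Type": "TXT",
        "TTL": 300,
        "ResourceRecords": [ { "Value": "\\"$record_value\\"" } ]
      }
    }
  ]
}
EOF
}
```

Assuming the AWS CLI is installed and configured, the output can be saved with `txt_change_batch _verify.example.com abc123 > batch.json` and applied with `aws route53 change-resource-record-sets --hosted-zone-id <your-zone-id> --change-batch file://batch.json`.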

Thank you for reading. Please post your questions in the comments section.